Extracting a secret sauce from Meta MovieGen

BayesianBacteria 2024. 10. 17. 23:14

Intro

Modern foundation models for image and video generation are often closed-source, and many technical details remain unavailable to the public. This is especially true for video generation models. (In my view, this closed-source trend is even more pronounced than in the LLM domain.) For example, we know very little, if anything, about the technical specifics behind models like Runway Gen3, LumaLabs, or OpenAI’s Sora. However, thanks to the Meta MovieGen team, a detailed technical report on their work has been made publicly available. In this article, I won’t focus on MovieGen itself, but rather on the know-how it provides: we will explore their recipes for designing and training video (and image) generation models.

Following the structure of the original paper, we will explore (1) their design choices, including architecture, loss functions, and others; (2) dataset construction; (3) training methods, such as training stages and parallelism; and finally (4) inference techniques. Each ‘recipe’ contains only the bullet points, with details excluded for simplicity. The aim is to provide a high-level overview of the key aspects without diving into technical specifics.

Design choices: architecture, loss-functions, and others

Common

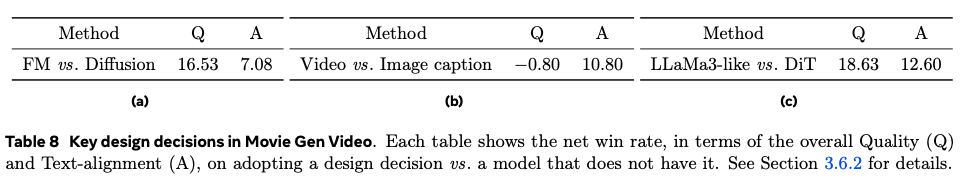

- Use flow matching, the rising star and (maybe) a better alternative to diffusion. It ensures "zero terminal SNR": the model is actually trained on pure Gaussian noise inputs.

- use $\sigma_{min}=10^{-5}$, not zero, unlike SD3
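To make the two bullets above concrete, here is a minimal NumPy sketch of how a flow-matching training pair can be built with the linear (optimal-transport) path. The function names are mine, and sampling `t` uniformly is an assumption made for simplicity, not necessarily what MovieGen does.

```python
import numpy as np

SIGMA_MIN = 1e-5  # the sigma_min value quoted in the bullet above


def flow_matching_pair(x1, x0, t, sigma_min=SIGMA_MIN):
    """Return (x_t, v_target) for the linear flow-matching path.

    x1: clean data sample, x0: Gaussian noise, t: time in [0, 1].
    """
    x_t = t * x1 + (1.0 - (1.0 - sigma_min) * t) * x0
    v_target = x1 - (1.0 - sigma_min) * x0  # time derivative of x_t
    return x_t, v_target


def flow_matching_loss(predict_velocity, x1, rng):
    """Mean-squared error between the predicted and the target velocity."""
    x0 = rng.standard_normal(x1.shape)
    t = rng.uniform(0.0, 1.0)  # assumption: uniform time sampling
    x_t, v_target = flow_matching_pair(x1, x0, t)
    return float(np.mean((predict_velocity(x_t, t) - v_target) ** 2))


rng = np.random.default_rng(0)
x1 = rng.standard_normal((4, 8))
x0 = rng.standard_normal((4, 8))

# At t = 0 the model input is pure noise ("zero terminal SNR").
x_start, _ = flow_matching_pair(x1, x0, t=0.0)
# At t = 1 the input is the data plus a tiny sigma_min-scaled noise residue.
x_end, _ = flow_matching_pair(x1, x0, t=1.0)
```

Note that the `t = 0` endpoint is part of the training distribution by construction, so there is no train/inference mismatch at the first sampling step.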

Temporal VAE

- Use Spatio-temporal autoencoder, initialized by spatial (commonly-used) VAE

- Jointly train on images and videos, in a ratio of 1 batch of images to 3 batches of videos.

- More channels in a latent space

- impose an "outlier penalty loss" to remove meaningless "high-norm" pixels in the decoded image.

- (Opinion) This could be replaced with register tokens, which aim to alleviate "high-norm" tokens in vision transformers.

- Tiling for efficient inference

- train with variable length of videos
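As a rough illustration of the "outlier penalty" idea, the sketch below penalizes latent positions that sit more than `r` standard deviations away from the mean. This is my own reading of the idea, not a verified copy of the paper's loss; the function name and the threshold `r = 3.0` are assumptions.

```python
import numpy as np


def outlier_penalty(latent, r=3.0):
    """Penalize latent positions far from the per-channel mean.

    latent: array of shape (H, W, C). r = 3.0 is an assumed threshold.
    """
    mean = latent.mean(axis=(0, 1), keepdims=True)      # per-channel mean
    std = latent.std(axis=(0, 1), keepdims=True)        # per-channel std
    distance = np.linalg.norm(latent - mean, axis=-1)   # (H, W) distances
    limit = r * np.linalg.norm(std.ravel())             # allowed radius
    # Only the part of the distance that exceeds the limit is penalized.
    return float(np.maximum(distance - limit, 0.0).mean())


flat = np.zeros((16, 16, 4))
spiky = flat.copy()
spiky[3, 5] = 100.0  # a single "high-norm" location
```

A flat latent gets zero penalty, while the single spike is pushed back toward the bulk of the distribution.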

Main transformer backbone architecture

On their philosophy for the architecture design:

"We intentionally keep the design of our backbone simple and similar to LLMs, specifically LLaMa3. This design choice allows us scale the model size and training, as discussed in ..."

- Guess: they might want to leverage their infrastructure and AI framework, already optimized for the LLaMa family, rather than exploring architectures tailored to T2I or T2V.

- Furthermore, they found that their simple architecture works better than specialized blocks while being more stable to train.

- patchify via a 3D convolution, but without compressing the temporal dimension (like OpenSora or others)

- learnable positional embedding for arbitrary size, aspect ratio and video length (from NaViT, a NeurIPS 2023 architecture)

- LLaMa3-like transformer architecture: RMSNorm, SwiGLU

- Cross-attention for text conditioning

- use multiple text encoders (the rationale behind the text encoders is interesting)

- Bidirectional (non-causal) attention, unlike the original LLaMa3
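For readers who have not seen the two LLaMa-style building blocks named above, here is a small NumPy sketch of RMSNorm and a SwiGLU feed-forward layer. The shapes and weight names are illustrative only and are not taken from the paper.

```python
import numpy as np


def rms_norm(x, gain, eps=1e-6):
    """Scale each token by its root-mean-square (no mean subtraction)."""
    rms = np.sqrt(np.mean(x * x, axis=-1, keepdims=True) + eps)
    return x / rms * gain


def silu(x):
    """SiLU / swish activation: x * sigmoid(x)."""
    return x / (1.0 + np.exp(-x))


def swiglu_ffn(x, w_gate, w_up, w_down):
    """SwiGLU feed-forward: a gated product followed by a down projection."""
    return (silu(x @ w_gate) * (x @ w_up)) @ w_down


rng = np.random.default_rng(0)
hidden, inner = 16, 32                      # illustrative sizes
tokens = rng.standard_normal((5, hidden))   # (sequence, hidden)
normed = rms_norm(tokens, gain=np.ones(hidden))
out = swiglu_ffn(
    normed,
    rng.standard_normal((hidden, inner)),
    rng.standard_normal((hidden, inner)),
    rng.standard_normal((inner, hidden)),
)
```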

Text encoder choice and its rationale

- Use 3 encoders: UL2, ByT5, and MetaCLIP. Interestingly, each choice has a specific reason.

- MetaCLIP is obtained by fine-tuning CLIP to increase the token length from 77 to 256. MetaCLIP is specialized in "text-image alignment".

- ByT5 is a byte-level text encoder. Therefore, it is specialized in "generating characters" (e.g. the prompt "A developer holding a sign that says 'I need a job'").

- UL2 is for long-sentence understanding.

- (FPS control with input text) they control the FPS (frames per second) of the video through the input text prompt: during pre-training, the text prompt includes "FPS-16", "FPS-XX"
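The FPS trick is easy to picture as a caption preprocessing step. In the tiny sketch below, the helper name and the choice to put the tag at the front of the caption are my assumptions; the bullet above only tells us that the prompt contains a tag such as "FPS-16".

```python
def add_fps_tag(prompt: str, fps: int) -> str:
    """Attach an FPS control tag to a caption (prefix placement is assumed)."""
    return f"FPS-{fps} {prompt}"


tagged = add_fps_tag("a cat chasing a laser pointer", 16)
```

At inference time, the same tag format would be used to request a frame rate.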

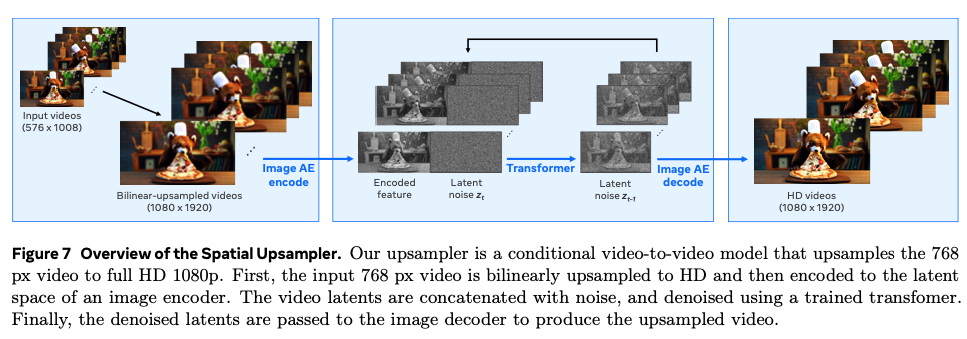

Spatial upsampling

- Train a new super-resolution transformer, but a smaller one (e.g. original: 30B, here: 7B)

- the transformer is trained with only spatial computation (there is no temporal attention)

- the number of input channels is doubled, due to the low-resolution frame inputs.

- (Improving temporal consistency with Multi-Diffusion, not with temporal attention) they use Multi-Diffusion (training-free, applied at inference time) to ensure consistency between upsampled frames.
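The core of the Multi-Diffusion idea can be sketched as "process overlapping windows independently, then average where they overlap". The code below applies that over the frame axis; the window size, the stride, and the uniform averaging weights are all assumptions made for illustration, and `denoise_window` is a hypothetical stand-in for one upsampler step.

```python
import numpy as np


def fuse_overlapping_windows(denoise_window, frames, window=4, stride=2):
    """Apply denoise_window to overlapping frame windows and average overlaps."""
    num_frames = frames.shape[0]
    total = np.zeros_like(frames, dtype=np.float64)
    count = np.zeros((num_frames,) + (1,) * (frames.ndim - 1))
    starts = list(range(0, max(num_frames - window, 0) + 1, stride))
    if starts[-1] + window < num_frames:  # make sure the tail is covered
        starts.append(num_frames - window)
    for s in starts:
        total[s:s + window] += denoise_window(frames[s:s + window])
        count[s:s + window] += 1.0
    return total / count  # uniform average over every window covering a frame


rng = np.random.default_rng(0)
video = rng.standard_normal((7, 8, 8, 3))  # (frames, height, width, channels)
fused = fuse_overlapping_windows(lambda w: w, video)
```

Because each frame in an overlap receives the average of several window predictions, neighbouring windows cannot drift apart, which is where the consistency comes from.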

Dataset

- they use a lot of visual filtering, like above.

- they even remove videos with excessive text with a video OCR model. why?

- scene boundary detection is done by FFmpeg

- with aspect ratio filtering, they achieve a mix of 60% landscape and 40% portrait videos

- remove the first few seconds of clips, which usually contain unstable camera movement or transition effects

- they filter out low motion videos

- remove "perceptually duplicate clips" via similarity in a copy-detection embedding space (feature space specialized in de-duplication)

- of course, as in many other text-to-something model developments, they generate synthetic captions.

- at least 60% of their high-resolution training set contains humans

- bucketing their training dataset by different aspect ratios and video durations (5 buckets)
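The de-duplication bullet above boils down to a similarity threshold in an embedding space. Here is a greedy sketch; the threshold value and the toy embeddings are assumptions, and a real pipeline would use a copy-detection model and an approximate nearest-neighbour index instead of this quadratic loop.

```python
import numpy as np


def deduplicate(embeddings, threshold=0.95):
    """Greedily keep clips whose cosine similarity to every kept clip is below threshold."""
    normed = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    kept = []
    for i, vec in enumerate(normed):
        if all(float(vec @ normed[j]) < threshold for j in kept):
            kept.append(i)
    return kept


clip_embeddings = np.array([
    [1.0, 0.0],
    [0.999, 0.01],  # near-duplicate of the first clip
    [0.0, 1.0],
])
kept_indices = deduplicate(clip_embeddings)
```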

Training

Towards model scaling and training efficiency

- they have well-organized, optimized, scaled architecture. yes.

- use vanilla multi-head attention rather than grouped-query attention (GQA): the main merit of GQA is a smaller KV cache during autoregressive generation with a causal mask, which video generation does not need.

- like other video model training, multi-stage training is used: stage 1 for image-only training, stage 2 for low-resolution videos, stage 3 for high-resolution videos...

- the majority of training budget is long-context high-resolution video training.

On the parallelism

- they combine multiple forms of parallelism for efficient training: tensor parallelism, sequence parallelism, context parallelism, and fully sharded data parallelism

- the fundamental aim of using a different parallelism for each transformer component is to reduce communication between machines

- for instance, layer norm treats each position along the sequence dimension independently, so sequence parallelism applies.

- they have done this with just "pytorch", compiled into CUDAGraphs, and not with DeepSpeed or others. How?
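The layer-norm remark above is easy to verify on a toy example: because the normalization only looks at the hidden dimension of each token, splitting the sequence across devices needs no communication at all. In this sketch the "devices" are just array shards.

```python
import numpy as np


def layer_norm(x, eps=1e-5):
    """Normalize every token over its hidden dimension."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


rng = np.random.default_rng(0)
tokens = rng.standard_normal((8, 16))  # (sequence, hidden)

# Pretend each shard of the sequence lives on a different device.
shards = np.split(tokens, 4, axis=0)
sharded_out = np.concatenate([layer_norm(s) for s in shards], axis=0)
full_out = layer_norm(tokens)
```

Attention, in contrast, mixes information across tokens, which is why it needs a different scheme.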

Fine-tuning

- (Dataset 1) they focus on balancing the concepts in the set of videos in their fine-tuning dataset.

- this is done by deduplication with k-NN in a text feature space.

- the details of the dataset pipeline are described under "Dataset" in this article.

- (Dataset 2) they manually identify cinematic videos and, again manually, caption them to build a subset of high-quality video data

- (Model averaging) they average model checkpoints obtained from SFT experiments that use various versions of the fine-tuning data, hyperparameters, and pre-trained checkpoints.

Comments on model averaging

Model averaging has a rich history of improving generalization in machine learning: for instance, SWA (stochastic weight averaging), EMA (exponential moving average, with Adam), ModelSoup, merging LoRA weights and its variants, and so on.

In modern deep learning, some of these approaches are still actively applied: for instance, EMA in diffusion models and LoRA weight merging for large language models. MovieGen demonstrates additional possibilities for leveraging these techniques. They average more "diverse" weights, obtained with different datasets, hyperparameters, and even pre-trained checkpoints.

In ensemble and Bayesian deep learning, the diversity of the ‘merged’ (or marginalized) models often leads to better generalization, such as models with weights in different basins of the weight space. I believe ‘diversity’ is also a key factor in model averaging, even though the mechanism of weight averaging is not identical to that in Bayesian methods.
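In its simplest form, averaging checkpoints is just a per-parameter weighted mean. The sketch below uses plain dictionaries of NumPy arrays as stand-ins for state dicts; the uniform default weights are an assumption, since the exact weighting MovieGen uses is not covered here.

```python
import numpy as np


def average_checkpoints(checkpoints, weights=None):
    """Return the per-parameter weighted mean of several checkpoints."""
    if not checkpoints:
        raise ValueError("need at least one checkpoint")
    if weights is None:
        weights = [1.0 / len(checkpoints)] * len(checkpoints)  # uniform soup
    if len(weights) != len(checkpoints):
        raise ValueError("one weight per checkpoint is required")
    averaged = {}
    for name in checkpoints[0]:
        averaged[name] = sum(w * ckpt[name] for w, ckpt in zip(weights, checkpoints))
    return averaged


ckpt_a = {"layer.weight": np.array([1.0, 3.0])}
ckpt_b = {"layer.weight": np.array([3.0, 5.0])}
merged = average_checkpoints([ckpt_a, ckpt_b])
```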

Learning-rate

- unlike previous public T2I reports, they repeatedly adjust the learning rate during training.

- they reduce learning rate by half at some point, which further reduces the loss.

- decrease the learning rate whenever the validation loss plateaus

- This kind of ‘learning-rate’ engineering used to be Deep Learning 101, but in modern training setups (such as LLMs and Diffusion models), it is often overlooked. It serves as a reminder of the importance of this kind of ‘basic engineering,’ even if it seems a bit tedious.

- Additionally, the validation loss of flow-matching loss is highly correlated with the quality of images or videos according to reports from StableDiffusion 3 and MovieGen.
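The "halve the learning rate when the validation loss plateaus" rule can be automated with a few lines of bookkeeping. This is a hypothetical helper of my own (the class name, `patience`, and `min_delta` are assumptions), not a description of how the MovieGen team triggered their reductions.

```python
class HalveOnPlateau:
    """Multiply the learning rate by `factor` after `patience` evaluations without improvement."""

    def __init__(self, lr, patience=3, factor=0.5, min_delta=0.0):
        self.lr = lr
        self.patience = patience
        self.factor = factor
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_evals = 0

    def step(self, val_loss):
        """Record one validation loss and return the learning rate to use next."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_evals = 0
        else:
            self.bad_evals += 1
            if self.bad_evals >= self.patience:
                self.lr *= self.factor
                self.bad_evals = 0
        return self.lr


scheduler = HalveOnPlateau(lr=1e-4, patience=2)
history = [scheduler.step(loss) for loss in [1.0, 0.9, 0.9, 0.9]]
```

Since the flow-matching validation loss tracks sample quality, it is a reasonable signal to drive this kind of rule.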

Inference

- they rewrite the input text prompt at inference time

- fine-tune another 8B LLaMa3 model specialized in prompt rewriting, with a human in the loop (generate rewrites with LLaMa3 70B, then select the high-quality rewrite pairs)

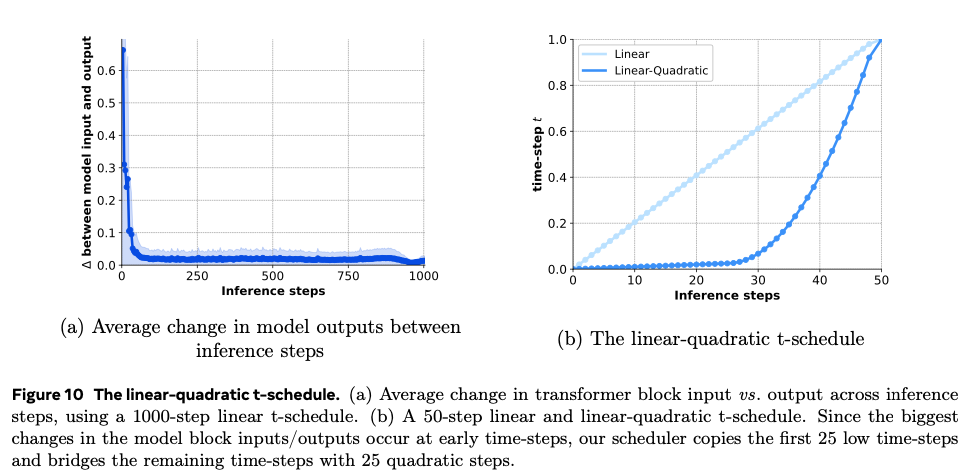

- they use a "linear-quadratic t-schedule" that focuses on the initial timesteps of the flow-matching ODE, since the difference between model input and output is large at the initial steps (they might be trying to balance it)

- (Q) is this the common phenomenon of the flow-matching models?

- if not, we can design a more suitable timestep schedule for our own model: one that concentrates steps in the regions with greater variation.

- this linear quadratic schedule can significantly reduce the number of steps to generate high-quality videos.

- (Observation 1) they found that simple Euler ODE solver outperforms higher-order solvers.

- (Observation 2) Video generation is more sensitive to the number of inference steps than image generation (the more steps, the more significant the improvement)
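To show what a schedule that "focuses on the initial timesteps" looks like in code, here is one possible linear-quadratic construction paired with a plain Euler solver. The step counts and the exact way the quadratic part is placed are my assumptions and may differ from the paper; the velocity function is a toy stand-in for the trained model.

```python
def linear_quadratic_schedule(num_steps=50, linear_steps=25, reference_steps=1000):
    """Return num_steps + 1 increasing times from 0 (noise) to 1 (data).

    The first `linear_steps` follow a fine linear grid with spacing
    1 / reference_steps; the rest are quadratically spaced up to t = 1.
    """
    linear = [i / reference_steps for i in range(linear_steps)]
    start = linear_steps / reference_steps
    quad_steps = num_steps - linear_steps
    quadratic = [
        start + (1.0 - start) * (j / quad_steps) ** 2
        for j in range(quad_steps + 1)
    ]
    return linear + quadratic


def euler_sample(velocity, x, times):
    """Integrate dx/dt = velocity(x, t) with first-order Euler steps."""
    for t0, t1 in zip(times[:-1], times[1:]):
        x = x + (t1 - t0) * velocity(x, t0)
    return x


times = linear_quadratic_schedule()

# Toy check: a constant velocity field moves x from -2.0 to 3.0 over t in [0, 1].
result = euler_sample(lambda x, t: 5.0, -2.0, times)
```

The schedule spends half of its steps in the first few percent of the trajectory and then takes increasingly large strides toward `t = 1`.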

To conclude

We have distilled the essential components of MovieGen, focusing on its core text-to-video backbone model. While it’s clear that training such a massive video generation model is not feasible for us, even with full knowledge of its underlying mechanisms (and I believe we don’t have all the details), my goal was to gain meaningful insights into the creation of text-to-something foundation models and to learn from their trial and error.

The original technical report provides detailed applications of the text-to-video backbone, including personalized generation, video editing through instructions, and even sound generation corresponding to the videos. For more amazing results, check out the original paper and their YouTube playlist.
